Pope Leo Warns Over AI As MIT Researcher Finds 90% Probability Of ‘Existential Threat’

In his first formal audience as the newly elected pontiff, Pope Leo XIV identified artificial intelligence (AI) as one of the most critical matters facing humanity.

“In our own day,” Pope Leo declared, “the church offers everyone the treasury of its social teaching in response to another industrial revolution and to developments in the field of artificial intelligence that pose new challenges for the defense of human dignity, justice and labor.” He tied the statement to the legacy of his namesake, Leo XIII, whose 1891 encyclical Rerum Novarum addressed workers’ rights and the moral dimensions of capitalism.

His remarks continued the direction charted by the late Pope Francis, who warned in his 2024 annual peace message that AI – lacking human values of compassion, mercy, morality and forgiveness – is too perilous to develop unchecked. Francis, who passed away on April 21, had called for an international treaty to regulate AI and insisted that the technology must remain “human-centric,” particularly in applications involving weapon systems or tools of governance.

‘Existential Threat’

As concern deepens within religious and ethical spheres, a similar urgency is emerging in the scientific community.

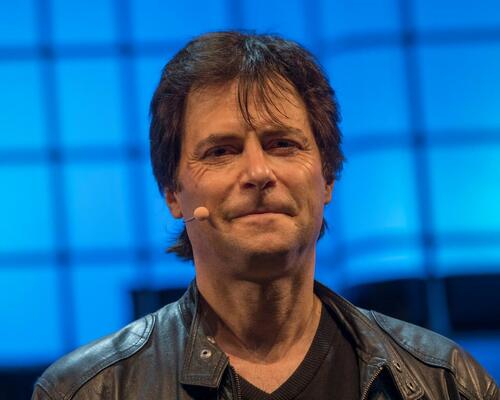

Max Tegmark, a physicist and AI researcher at MIT, has drawn a sobering parallel between the dawn of the atomic age and the present-day race to develop artificial superintelligence (ASI). In a new paper co-authored with three MIT students, Tegmark introduced the concept of a “Compton constant” – the estimated probability that ASI would escape human control. It is named after physicist Arthur Compton, who in the 1940s famously calculated the risk that a nuclear test would ignite Earth’s atmosphere.

“The companies building super-intelligence need to also calculate the Compton constant, the probability that we will lose control over it,” Tegmark told The Guardian. “It’s not enough to say ‘we feel good about it’. They have to calculate the percentage.”

Tegmark has calculated a 90% probability that a highly advanced AI would pose an existential threat.

The paper urges AI companies to undertake a risk assessment as rigorous as that which preceded the first atomic bomb test, where Compton reportedly estimated the odds of a catastrophic chain reaction at “slightly less” than one in three million.

Tegmark, co-founder of the Future of Life Institute and a vocal advocate for AI safety, argues that calculating such probabilities can help build the “political will” for global safety regimes. He also co-authored the Singapore Consensus on Global AI Safety Research Priorities, alongside Yoshua Bengio and representatives from Google DeepMind and OpenAI. The report outlines three focal points for research: measuring AI’s real-world impact, specifying intended AI behavior, and ensuring consistent control over systems.

This renewed commitment to AI risk mitigation follows what Tegmark described as a setback at the recent AI Action Summit in Paris, where U.S. Vice President JD Vance dismissed concerns by asserting that the AI future is “not going to be won by hand-wringing about safety.” Nevertheless, Tegmark noted a resurgence in cooperation: “It really feels the gloom from Paris has gone and international collaboration has come roaring back.”
